The cybersecurity landscape has been dramatically reshaped by the advent of generative AI. Attackers now leverage large language models (LLMs) to impersonate trusted individuals and automate social engineering tactics at scale.

Let’s review where these rising attacks stand, what’s fueling them, and how to prevent them rather than merely detect them.

Recent threat intelligence reports highlight the growing sophistication and prevalence of AI-driven attacks:

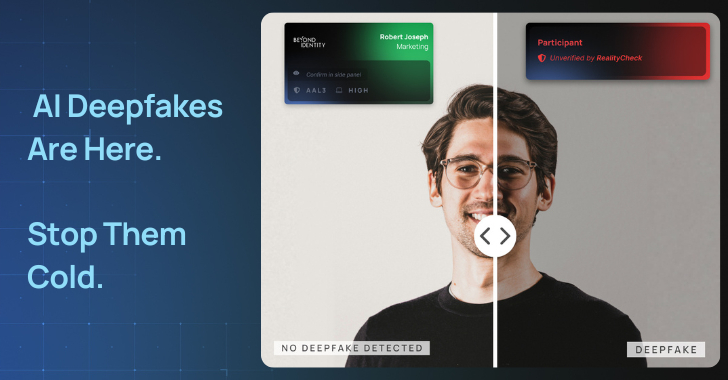

In this new era, trust can’t be assumed or merely detected. It must be proven deterministically and in real time.

Three trends are converging to make AI impersonation the next big threat vector:

And while endpoint tools and user training may help, they’re not built to answer a critical question in real time: Can I trust the person I’m talking to?

Traditional defenses focus on detection, such as training users to spot suspicious behavior or using AI to analyze whether someone is fake. But deepfakes are getting too good, too fast. You can’t fight AI-generated deception with probability-based tools.

Actual prevention requires a different foundation, one based on provable trust, not assumption. That means:

Prevention means creating conditions where impersonation isn’t just hard but impossible. That’s how you stop AI deepfake attackers before they join high-risk conversations such as board meetings, financial transactions, or vendor collaborations.

| Detection-Based Approach | Prevention Approach |
|---|---|
| Flag anomalies after they occur | Block unauthorized users from ever joining |
| Rely on heuristics & guesswork | Use cryptographic proof of identity |
| Require user judgment | Provide visible, verified trust indicators |

RealityCheck by Beyond Identity was built to close this trust gap inside collaboration tools. It gives every participant a visible, verified identity badge that’s backed by cryptographic device authentication and continuous risk checks.
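This article doesn’t describe how RealityCheck works internally, so what follows is only a generic sketch of the “cryptographic proof of identity” idea from the comparison table: a challenge-response check that yields a yes/no answer instead of a likelihood score. The enrollment store (`ENROLLED_DEVICES`) and the helpers `enroll`, `issue_challenge`, `respond`, and `admit` are hypothetical names. The sketch also uses a shared-secret HMAC from Python’s standard library as a simplified stand-in for the device-bound asymmetric keys a production system would more likely use.

```python
import hashlib
import hmac
import secrets

# Hypothetical enrollment store: participant ID -> device secret.
# Simplification: a shared-secret HMAC stands in for a private key held in
# the device's secure hardware and verified against a registered public key.
ENROLLED_DEVICES: dict[str, bytes] = {}


def enroll(participant_id: str) -> bytes:
    """Provision a fresh random secret for a participant's device."""
    key = secrets.token_bytes(32)
    ENROLLED_DEVICES[participant_id] = key
    return key


def issue_challenge() -> bytes:
    """Verifier side: a fresh random nonce, so old responses can't be replayed."""
    return secrets.token_bytes(16)


def respond(device_key: bytes, challenge: bytes) -> bytes:
    """Device side: prove possession of the key without revealing it."""
    return hmac.new(device_key, challenge, hashlib.sha256).digest()


def admit(participant_id: str, challenge: bytes, response: bytes) -> bool:
    """Admit only on an exact cryptographic match; no score, no threshold."""
    key = ENROLLED_DEVICES.get(participant_id)
    if key is None:
        return False  # unknown participant: never admitted
    expected = hmac.new(key, challenge, hashlib.sha256).digest()
    # Constant-time comparison avoids leaking information through timing.
    return hmac.compare_digest(expected, response)


if __name__ == "__main__":
    alice_key = enroll("alice")
    challenge = issue_challenge()
    print(admit("alice", challenge, respond(alice_key, challenge)))  # True
    # An impostor can sound or look like Alice but lacks her device key.
    impostor_key = secrets.token_bytes(32)
    print(admit("alice", challenge, respond(impostor_key, challenge)))  # False
```

The design point is that `admit` never weighs how convincing a voice or face seems. Without the enrolled key, the check fails, and a fresh nonce per session keeps a recorded response from being reused.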